Introducing Retrieval Augmented Generation (RAG) to Enhance AI Capabilities

Introduction

Large Language Models (LLMs) like ChatGPT, Llama, Mistral and many others have become cornerstones of modern AI due to their ability to understand and generate human-like text. These models have revolutionized how machines interact with information, offering unprecedented opportunities across a wide range of applications. However, despite their capabilities, LLMs come with notable limitations, including:

Static Knowledge Base

LLMs are typically trained on a fixed dataset that may consist of a snapshot of the internet or curated corpora up to a certain point in time. Once training is complete, their knowledge base becomes static.

- Outdated Information: Because the models do not update their knowledge after training, they can quickly become outdated as new information emerges and societal norms evolve.

- Inability to Learn Post-Training: Without continuous updates or further training, LLMs cannot adapt to new data or events that occur after their last training cycle. This limits their ability to provide relevant and current responses.

Factuality Challenges

Factuality in LLMs relates to the accuracy and truthfulness of the information they generate. LLMs often struggle with maintaining a high level of factuality for several reasons:

- Confidence in Incorrect Information: LLMs can produce factually incorrect or misleading responses that sound just as confident as correct ones. This is because they rely on statistical patterns in their training data rather than on verified facts.

- Hallucination of Data: LLMs are known to hallucinate information, meaning they generate plausible but entirely fabricated details. This can be particularly problematic in settings that require high accuracy, such as medical advice or news reporting.

- Source Attribution: LLMs do not keep track of the sources of their training data, making it difficult to trace the origin of the information they provide, which complicates the verification of generated content.

Scalability Concerns

Scaling LLMs involves more than just handling larger datasets or producing longer text outputs. It encompasses several dimensions that can present challenges:

- Computational Resources: Training state-of-the-art LLMs requires significant computational power and energy, often involving hundreds of GPUs or TPUs running for weeks. This high resource demand makes scaling costly and less accessible to smaller organizations or independent researchers.

- Model Size and Management: As models scale, they become increasingly complex and difficult to manage. Larger models require more memory and more sophisticated infrastructure for deployment, which can complicate integration and maintenance in production environments.

- Latency and Throughput: Larger models generally process information more slowly, which can lead to higher latency in applications that require real-time responses. Managing throughput effectively while maintaining performance becomes a critical challenge as the model scales.

These challenges underscore the limitations of current LLMs and highlight the necessity for innovative solutions like Retrieval Augmented Generation (RAG), which seeks to address these issues by dynamically integrating up-to-date external information and improving the model’s adaptability, accuracy, and scalability.

What is Retrieval Augmented Generation?

Introduced in 2020 by researchers at Facebook AI Research (now Meta AI), Retrieval Augmented Generation (RAG) is an approach designed to overcome these shortcomings. At its core, RAG is a hybrid model that enhances the generative capabilities of traditional LLMs by pairing them with a retrieval component. This integration allows the model to draw on a broader and more up-to-date pool of information while generating a response, thereby improving the relevance and accuracy of its outputs.

How RAG Works

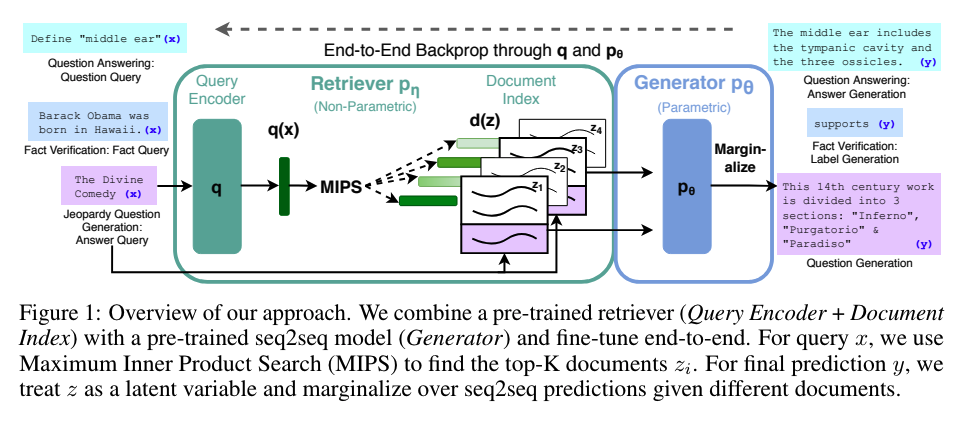

The architecture of a typical RAG system combines two main components: a retrieval mechanism and a transformer-based generator. Here’s how it functions:

- Retrieval Process: When a query arrives, the RAG system first searches a large collection of documents for the content most relevant to the query. This step relies on information retrieval techniques to match the query with the appropriate documents.

- Generation Process: The retrieved documents are then passed, together with the query, to a transformer-based model, which synthesizes the information into a coherent and contextually appropriate response. Grounding the output in retrieved content makes it more likely to be accurate and tailors it to the specific needs of the query.
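
The two steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the three-document corpus is hypothetical, the word-overlap `keyword_score` is a deliberately simple stand-in for the embedding-based retrieval real systems use, and `generate` is a placeholder for whatever LLM call your stack provides.

```python
import re
from typing import Callable, List, Set

# Hypothetical toy corpus standing in for the document database.
DOCUMENTS = [
    "RAG combines a retriever with a text generator.",
    "FAISS is a library for efficient similarity search over dense vectors.",
    "Paris is the capital of France.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))


def keyword_score(query: str, doc: str) -> int:
    """Stand-in relevance score: how many distinct query words appear in the document."""
    return len(tokenize(query) & tokenize(doc))


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Retrieval step: return the k highest-scoring documents."""
    return sorted(docs, key=lambda d: keyword_score(query, d), reverse=True)[:k]


def build_prompt(query: str, context: List[str]) -> str:
    """Augmentation step: put the retrieved passages in front of the question."""
    context_block = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}\n"
        "Answer:"
    )


def rag_answer(query: str, generate: Callable[[str], str], k: int = 2) -> str:
    """Retrieve, build the augmented prompt, then hand it to the generator."""
    context = retrieve(query, DOCUMENTS, k)
    return generate(build_prompt(query, context))


# Usage: an echo function replaces the LLM so the augmented prompt can be inspected.
print(rag_answer("What is the capital of France?", generate=lambda prompt: prompt, k=1))
```

Keeping retrieval and generation behind separate functions makes it easy to swap the scorer for an embedding index, or the echo function for a real model, without touching the rest of the loop.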

Embeddings: RAG’s Secret Sauce

In Retrieval Augmented Generation (RAG) systems, embeddings play a crucial role in enabling the efficient retrieval of relevant documents or data that the generative component uses to produce answers. Here’s an overview of how embeddings function within a RAG framework:

What are Embeddings?

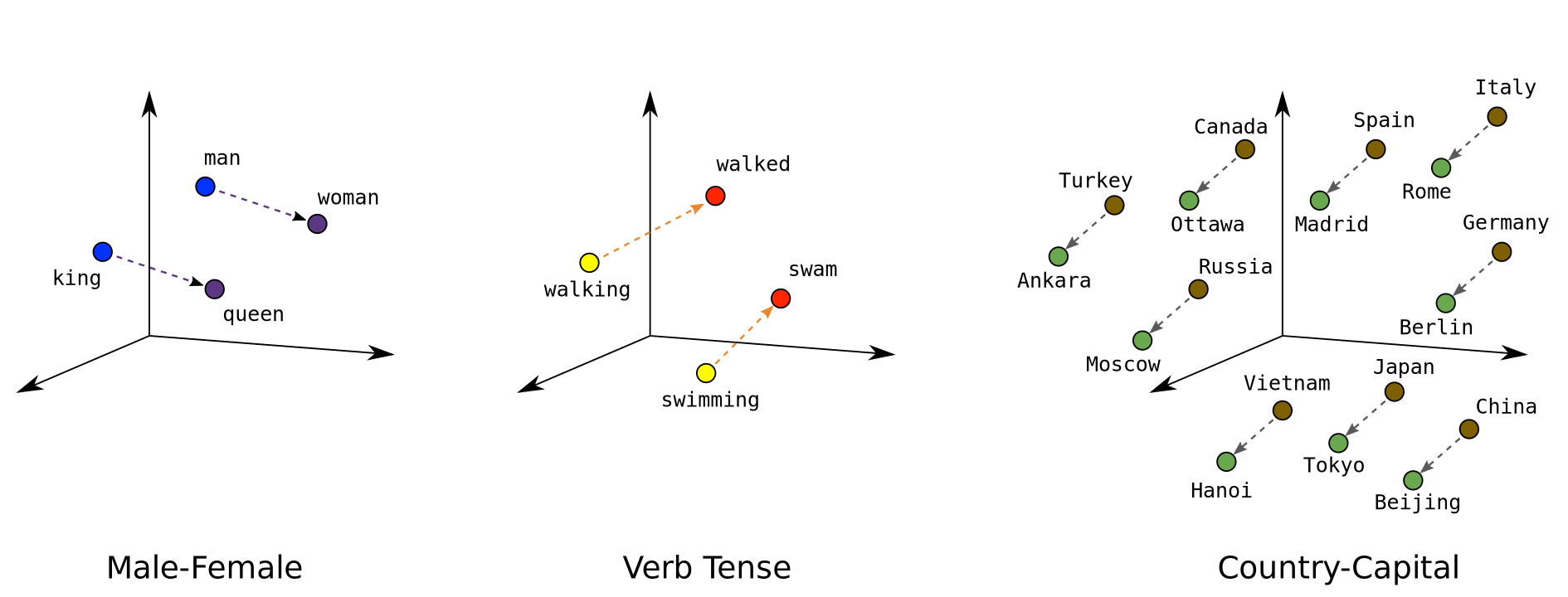

Embeddings are dense, low-dimensional representations of higher-dimensional data. In the context of RAG, embeddings are typically generated for both the input query and the documents in a database. These embeddings transform textual information into vectors in a continuous vector space, where semantically similar items are located closer to each other.
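
To make "closer to each other" concrete, the sketch below computes cosine similarity, one of the most common closeness measures for embeddings. The three-dimensional vectors are hypothetical and hand-picked for illustration; a real embedding model produces vectors with hundreds of dimensions whose values are learned from data.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """cos(a, b) = dot(a, b) / (|a| * |b|): 1.0 for the same direction, 0.0 for orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical 3-dimensional "embeddings", hand-picked for illustration only.
toy_embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.75, 0.2],
    "car": [0.1, 0.2, 0.95],
}

for word, vector in toy_embeddings.items():
    score = cosine_similarity(toy_embeddings["cat"], vector)
    print(f"cat vs {word}: {score:.3f}")
```

With these toy values, "kitten" scores close to 1.0 against "cat" while "car" scores much lower, which is the property semantic retrieval relies on.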

Role of Embeddings in RAG

- Semantic Matching: Embeddings enable the RAG system to perform semantic matching between the input query and the potential source documents. By converting words, phrases, or entire documents into vectors, the system can use distance metrics (like cosine similarity) to find the documents that are most semantically similar to the query. This is more effective than traditional keyword matching, which might miss relevant documents due to synonymy or differing phrasings.

- Efficient Information Retrieval: Without embeddings, searching through a large database for relevant information could be computationally expensive and slow, especially as databases scale up. Embeddings allow the use of advanced indexing and search algorithms (like approximate nearest neighbor search algorithms) that can quickly retrieve the top matching documents from a large corpus, making the process both scalable and efficient.

- Improving the Quality of Generated Responses: By retrieving documents that are semantically related to the query, the RAG system gives its generative component access to contextually relevant, information-rich input. This helps it produce more accurate, detailed, and contextually appropriate answers, because the retrieved content supplies specific details and factual content that the model can incorporate into its responses.

- Continual Learning and Adaptation: In some advanced implementations, embeddings can also be dynamically updated or refined based on feedback loops from the system’s performance or new data, enhancing the model’s ability to adapt over time. This can be crucial for applications in rapidly changing fields like news or scientific research.
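
Exact search compares the query with every stored vector, which becomes slow as the corpus grows. The sketch below illustrates the approximate nearest neighbor idea with random-hyperplane locality-sensitive hashing (LSH), a simple ANN technique chosen here for brevity. It is not the HNSW graph method used by libraries such as hnswlib, and the class and parameter names are hypothetical. Vectors that point in similar directions tend to land in the same hash bucket, so a query is scored with cosine similarity only against its own bucket instead of the whole corpus.

```python
import math
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


class HyperplaneLSHIndex:
    """Hypothetical ANN index based on random-hyperplane LSH.

    Each random hyperplane contributes one bit (which side the vector lies on);
    vectors separated by a small angle tend to get the same bit pattern.
    """

    def __init__(self, dim: int, num_planes: int = 8, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Random normal vectors define hyperplanes through the origin.
        self.planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_planes)]
        self.buckets: Dict[Tuple[bool, ...], List[int]] = defaultdict(list)
        self.vectors: List[Sequence[float]] = []
        self.ids: List[str] = []

    def signature(self, v: Sequence[float]) -> Tuple[bool, ...]:
        return tuple(sum(p * x for p, x in zip(plane, v)) >= 0 for plane in self.planes)

    def add(self, doc_id: str, vector: Sequence[float]) -> None:
        self.buckets[self.signature(vector)].append(len(self.vectors))
        self.vectors.append(vector)
        self.ids.append(doc_id)

    def search(self, query: Sequence[float], k: int = 3) -> List[Tuple[str, float]]:
        # Only vectors sharing the query's bucket are scored exactly.
        candidates = self.buckets.get(self.signature(query))
        if not candidates:
            # Empty bucket: fall back to an exact scan (a simplification for this sketch).
            candidates = list(range(len(self.vectors)))
        scored = [(self.ids[i], cosine(query, self.vectors[i])) for i in candidates]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


# Usage: index 1,000 random 16-dimensional vectors, then query with a stored one.
data_rng = random.Random(42)
index = HyperplaneLSHIndex(dim=16, num_planes=6)
for n in range(1000):
    index.add(f"doc-{n}", [data_rng.uniform(-1.0, 1.0) for _ in range(16)])

results = index.search(index.vectors[123], k=3)
print(results)  # doc-123 comes first, since a vector is most similar to itself
bucket_size = len(index.buckets[index.signature(index.vectors[123])])
print(f"scored {bucket_size} of {len(index.vectors)} vectors")
```

The trade-off is recall: a true neighbor that falls into a different bucket is missed. LSH setups usually mitigate this with several independent hash tables, and many production systems use graph- or cluster-based indexes (such as HNSW or FAISS’s IVF indexes) instead.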

Technologies and Tools for Embeddings in RAG

Several technologies facilitate the creation and management of embeddings in a RAG system. Libraries like FAISS, Annoy and hnswlib (an implementation of the HNSW algorithm) are popular for building efficient indexes that support fast retrieval over embeddings. To generate the embeddings themselves, Sentence Transformers is a common choice, along with machine learning frameworks such as TensorFlow and PyTorch and models from Hugging Face’s Transformers library.

Benefits of RAG

RAG addresses several limitations of LLMs effectively:

- Dynamic Knowledge Integration: Unlike a standalone LLM, RAG can pull current data from external sources at query time, helping keep responses timely as well as accurate.

- Enhanced Accuracy and Relevance: By grounding responses in retrieved, up-to-date information, RAG can considerably improve their factuality and relevance.

Use Cases and Applications

RAG has found practical applications in several fields:

- Customer Support: Automating responses to user inquiries with precise, up-to-date information.

- Healthcare: Assisting medical professionals by quickly retrieving medical literature and patient data to offer better diagnostic support.

- Legal Advisory: Supporting the preparation of legal documents and advice by retrieving and integrating the latest legal precedents and regulations.

Getting Started with RAG

For those interested in implementing RAG, there are several tools and frameworks available, both proprietary and open source. Any RAG implementation has two main components:

- Embeddings: The raw text you want to index needs to be converted to embeddings. There are proprietary options from OpenAI, Cohere and other providers. In the open source world, Sentence Transformers provides out-of-the-box functionality for encoding a text dataset into embeddings with open-source transformer models, and FAISS can then index those embeddings for fast similarity search.

- Vector Databases: Once text is converted to embeddings, you will need to store them in a vector database. Ideally, this database will expose functionality to index the embeddings and to perform semantic search on top of them. Semantic search typically ranks results by a similarity metric such as cosine similarity, while approximate nearest neighbor (ANN) algorithms keep those searches fast as the collection grows. There are many techniques and strategies for the retrieval stage, and they need to be tuned to the use case. Vector database options, both proprietary and open source, include:

- Qdrant

- Milvus

- Weaviate

- ChromaDB

- PostgreSQL + pgvector
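
The two components can be illustrated together with a tiny in-memory store. This is a sketch under loud assumptions: `toy_embed` is a hashed bag-of-words (feature hashing) stand-in for a real embedding model and captures shared words, not meaning, and `InMemoryVectorStore` is a hypothetical class that does not reflect the API of Qdrant, Milvus, Weaviate, ChromaDB, or pgvector. It does show the core contract those systems provide: store vectors by id, search by similarity, and overwrite entries so that answers reflect new information without retraining any model.

```python
import hashlib
import math
import re
from typing import Dict, List, Tuple

DIM = 256  # Hypothetical vector size for the toy encoder.


def toy_embed(text: str, dim: int = DIM) -> List[float]:
    """Hypothetical stand-in for an embedding model: hashed bag-of-words, L2-normalized.

    It only captures shared words, not meaning; a real encoder would replace it.
    """
    vec = [0.0] * dim
    for token in re.findall(r"\w+", text.lower()):
        digest = hashlib.blake2b(token.encode("utf-8"), digest_size=8).digest()
        vec[int.from_bytes(digest, "little") % dim] += 1.0
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0  # avoid dividing by zero for empty text
    return [x / norm for x in vec]


class InMemoryVectorStore:
    """Hypothetical minimal vector store: upsert by id, search by cosine similarity."""

    def __init__(self) -> None:
        self._vectors: Dict[str, List[float]] = {}
        self._texts: Dict[str, str] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        # Re-using an id overwrites the entry, so stored knowledge can be refreshed.
        self._vectors[doc_id] = toy_embed(text)
        self._texts[doc_id] = text

    def search(self, query: str, k: int = 3) -> List[Tuple[str, float]]:
        q = toy_embed(query)
        # Non-empty texts map to unit vectors, so the dot product equals cosine similarity.
        scored = [(doc_id, sum(a * b for a, b in zip(q, v))) for doc_id, v in self._vectors.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]

    def text(self, doc_id: str) -> str:
        return self._texts[doc_id]


store = InMemoryVectorStore()
store.upsert("pricing", "The basic plan costs 10 dollars per month.")
store.upsert("support", "Support is available by email on weekdays.")
best_id, _ = store.search("How much does the basic plan cost per month?", k=1)[0]
print(store.text(best_id))

# New information is searchable immediately; no model is retrained.
store.upsert("pricing", "The basic plan costs 12 dollars per month.")
print(store.text(store.search("basic plan cost per month", k=1)[0][0]))
```

In a real deployment, `toy_embed` would be replaced by an embedding model and the dictionary by one of the databases listed above, which add persistence, ANN indexing, and metadata filtering on top of the same idea.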

In the next chapters, we’ll dive deep into the following RAG topics:

- Different RAG strategies

- Vector DB options & benchmarks

- Practical RAG use cases

Stay tuned!