Introduction

I have been tinkering with LLMs, at work and outside of it, for quite a while now, and one of the most pressing issues compared to traditional machine learning is the unsolved problem of how to evaluate them. Evaluating LLM outputs is considerably more difficult than evaluating a classification problem.

A classification problem deals with discrete outputs in the form of a label. There is a finite set of outcomes to evaluate against.

Let’s say you have two classes: birds and trees. You check how many examples fall into each class correctly and incorrectly. No problem. You use a confusion matrix and, depending on the class imbalance, perhaps a different metric, but in general these form a set of well-defined metrics that can be applied.
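To make the contrast concrete, here is a minimal sketch of how little is needed to evaluate the birds-and-trees case. The labels are made up for illustration, and `confusion_counts` is a hypothetical helper name, not part of any library.

```python
from collections import Counter


def confusion_counts(y_true, y_pred, positive):
    """Count TP/FP/FN/TN with `positive` treated as the positive class."""
    counts = Counter()
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            counts["tp" if truth == positive else "fp"] += 1
        else:
            counts["fn" if truth == positive else "tn"] += 1
    return counts


# Made-up labels, for illustration only.
y_true = ["bird", "bird", "tree", "tree", "bird", "tree"]
y_pred = ["bird", "tree", "tree", "tree", "bird", "bird"]

c = confusion_counts(y_true, y_pred, positive="bird")
precision = c["tp"] / (c["tp"] + c["fp"])
recall = c["tp"] / (c["tp"] + c["fn"])
print(dict(c), round(precision, 2), round(recall, 2))
```

Every prediction lands in exactly one of four cells, which is what makes the established metrics applicable without further design work.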

Now think about evaluating an LLM output, which is a text string. Unfortunately, this is not so well defined. There are classical metrics such as BERTScore, but they need a clearly defined ground-truth dataset, which is often hard to come by in the early stages of a project because data integration is still missing.

Let’s imagine I just want to ask the LLM to summarize an input given by my business unit. Many of the current notions fall short when applied to actual business problems.

For a metric to be useful, it needs to be:

- Specific enough to inspire changes that optimize the system, i.e. I need to be able to spot patterns in its output more easily than when I, as an engineer, read through the summaries myself.

- Indicative of the direction of change after applying a design change to the system, i.e. it shows me whether I now do better or worse with respect to something I care about.

Now one could go with an LLM as a judge. But this comes with its own issues: LLM judges are known to be biased in their scoring, and are thus sometimes misaligned with user feedback. Other people have written more extensively on this, for example in this blog post, which points to a really great article on LLM as a judge and its limitations.

In summary, issues usually arise from a failure to assign continuous scores reliably, as LLMs show bias towards certain numbers (42…). These are clearly artifacts that stem from the training data. Other recommendations are to specify clear scoring rubrics and to provide reasoning steps, which generally addresses the worst of these issues. More specifically for practitioners, an LLM as a judge is just another prompt to engineer and optimize, which, with all love for LLMs, any user will tell you is a brittle endeavour in itself.

An example of such a prompt is shown below. When used with GPT-4, it shows a modest correlation of 0.6 with human judgement. Depending on your goal, this is quite a discrepancy. What is worse, it is certainly not specific enough to indicate what needs to be changed, unless a data scientist sits down and maps the context to the summaries for low-scoring examples.

At best, this is a lot of work; at worst, it is a fruitless endeavour if the metric picks up on undesired issues. This is likely if the prompt definition is not inspired by what the business actually wants to see.
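For readers who want to check a judge against human ratings themselves, the sketch below computes a Pearson correlation in plain Python. The post does not say which correlation coefficient the 0.6 refers to, so Pearson is an assumption here, and both score lists are invented placeholders rather than real measurements.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long score lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Invented placeholder scores on a 1-5 scale, for illustration only.
judge_scores = [4, 4, 5, 3, 4, 2]
human_scores = [5, 2, 4, 3, 4, 1]

print(f"correlation: {pearson(judge_scores, human_scores):.2f}")
```

A single coefficient like this says how well the two rankings agree overall, but nothing about why individual summaries scored low.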

You can see that you are asking a model that essentially has no memory to keep facts in mind, compare the summary against the context used to generate it, and simultaneously compute a score, a task on which it was essentially never explicitly trained.

That is a lot to ask of an LLM, and it is often rooted in the assumption that LLMs are able to reason, which has been called into question as well. LLMs can be considered general language computation engines, nothing more and nothing less.

```python
import dspy


class LLMJudge(dspy.Signature):
    """
    You will be given a <source_text>. You will then be given one <summary> written for this <source_text>. Your task is to rate the <summary> on one metric.

    Please make sure you read and understand these instructions carefully.
    Please keep this <source_text> open while reviewing, and refer to it as needed.

    Evaluation Criteria:
    <faithfulness> (1-5) - the relative amount of facts the <summary> contains compared to the source text.
    A faithful <summary> contains the maximum number of facts contained in the <source_text>.
    Annotators were also asked to penalize summaries that contained relatively fewer facts.

    Evaluation Steps:
    1. Read the news <source_text> carefully and identify the main facts and details it presents.
    2. Read the <summary> and compare it to the <source_text>. Check how many facts the <summary> contains compared to the <source_text>.
    3. Return a score for <faithfulness> based on the Evaluation Criteria and return as <faithfulness>

    <source_text>:
    <summary>:
    <faithfulness>:
    """

    # Inputs: the original text and the summary to be judged.
    source_text = dspy.InputField()
    summary = dspy.InputField()
    # Output: the 1-5 faithfulness score assigned by the judge.
    faithfulness = dspy.OutputField()
```

I personally believe that evaluation is quite specific to the use case. But if one groups use cases by their expected output and thinks about their failure modes, one can derive specific measures that are useful in a general setting.

In my first post I want to talk about a pattern I found useful when evaluating LLM-generated outputs that go beyond a simple answer and are complex aggregates of inputs. This pattern emerged when observing the specifics of the summarization problem from a bird’s-eye view.

Let’s look at the specifics of the problem:

- LLMs produce non-deterministic output. Hence, every time I ask my LLM, the answer might change. This problem intensifies the more things I ask of my LLM at once.

- When I specify things in my prompt that are important to the business, I can theoretically trigger a hallucination. Each of these requests can lead to a hallucination, where, despite the information not being present in the input, the LLM answers based on the prompt rather than the context passed.

- Each summary comes with some specific expectation, based on the input and the specification coming from the business.

- Now, if you think about the basic unit of information that makes a summary useful or not, we are talking about a **fact**. A fact the business cares about is either included or excluded. Or, in the case of a hallucination, the fact is superfluous.

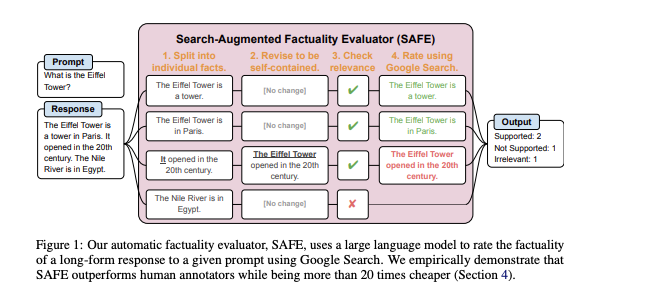
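The three outcomes for a fact (included, excluded, superfluous) map naturally onto set operations. The following is only a sketch of that bookkeeping: `compare_facts` is a hypothetical name, and it assumes facts can be matched by exact string equality, which real text rarely allows; in practice the matching step would need something like an entailment check.

```python
def compare_facts(expected, found):
    """Split facts into included, missing, and superfluous (hallucinated)."""
    expected, found = set(expected), set(found)
    return {
        "included": expected & found,     # expected and present in the output
        "missing": expected - found,      # expected but absent from the output
        "superfluous": found - expected,  # present but never expected
    }


# Illustrative placeholder facts, not taken from a real summary.
outcome = compare_facts(
    expected={"fact A", "fact B", "fact C"},
    found={"fact A", "fact C", "fact D"},
)
for bucket, facts in outcome.items():
    print(bucket, sorted(facts))
```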

There are already papers exploring this notion, most notably this one, where the approach named SAFE has an extension that looks up the facts on the internet with an agentic workflow. While this is not necessary for the majority of tasks, it is a nice way to think about possible extensions. What we are more interested in here is the decomposition of a piece of text into its individual facts, as illustrated in the accompanying figure.

Given a few examples, the prompt primarily asks the model to split a sentence, which can be an LLM-generated answer in a RAG system or a summary, into its component facts. With a few modifications, we can implement this prompt in DSPy. To achieve this, we use a typed predictor, making sure that we do in fact get a list of facts back. This mitigates another inherent issue in working with LLMs: the downstream application, in our case the metric computation, expects a list of facts it can check for, but there is never a guarantee that the formatting succeeds and that the output can be consumed downstream.

Inspired by all these observations, there seems to be a way to evaluate an LLM output based on expected facts. In some settings, this is a viable option. In most of these settings, it comes down to understanding your business requirements thoroughly and collecting them.

A good example would be this blog post. The idea here is to summarize the blog post while retaining the important information. In the context of a business unit, for example, I define important “facts” as information about measuring LLM outputs. I will use the sections of the blog post above this paragraph as inputs to a summarization step. The simplest form is a small DSPy Summarizer module. For the sake of reproducibility, we show it here. It is not optimized, so nothing much was done to it.
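To illustrate the formatting problem the typed predictor guards against, here is a small standard-library sketch of the kind of validation a downstream consumer would otherwise need. It is not how DSPy does it internally; `parse_fact_list` is a hypothetical helper, and it assumes the model was asked to reply with a JSON list of strings.

```python
import json


def parse_fact_list(raw: str) -> list[str]:
    """Parse a raw model reply into a list of facts, or raise ValueError."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, list) or not all(isinstance(x, str) for x in data):
        raise ValueError("expected a JSON list of strings")
    # Drop empty entries and surrounding whitespace.
    return [x.strip() for x in data if x.strip()]


facts = parse_fact_list('["LLMs produce non-deterministic output.", " A second fact. "]')
print(facts)
```

A reply that starts with free text such as "Here are the facts:" raises a `ValueError` instead of silently reaching the metric computation.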

```python
import dspy


class Summarizer(dspy.Signature):
    """
    You will be given a <source_text> which you have to summarize
    keeping all important details so that we still have all facts of the original <source_text>
    and show them as bullet points.
    """

    source_text = dspy.InputField()
    summary = dspy.OutputField()
```

The output summary is this:

- Evaluating Language Learning Models (LLMs) is a complex issue due to their non-deterministic output and the difficulty in defining a clear ground truth dataset.

- Traditional metrics such as the Bert score are not always applicable, especially in the early stages of a project.

- A useful metric needs to be specific enough to inspire system changes and indicate the direction of change after applying a design change.

- Using an LLM as a judge has its own issues, including bias in scoring and a lack of alignment with user feedback.

- The author suggests a new approach to evaluate LLM output based on expected facts, which requires a thorough understanding of business requirements.

- This approach involves decomposing a piece of text into its individual facts and checking for their presence or absence in the LLM output.

- The author also highlights the risk of “hallucination”, where the LLM generates an answer based on the prompt, but not the context passed.

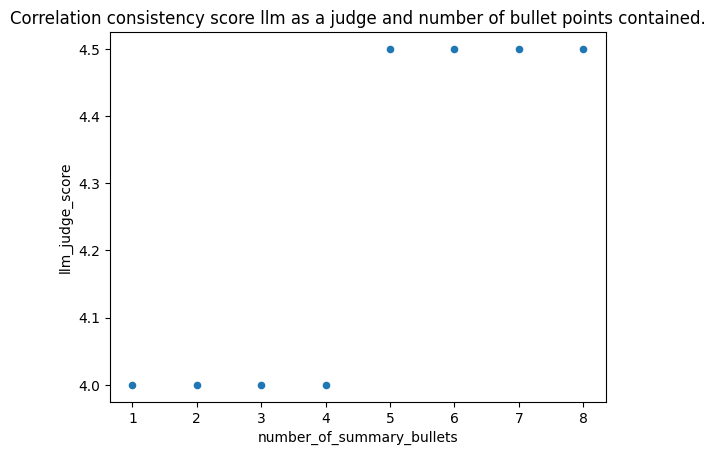

- The author suggests using a tool like DSPy for implementing this approach. Now lets imagine an experiment where I remove a bullet point successively. I would expect that the LLM as a judge shown above would at least somewhat represent this gradual loss of information. The scores of this experiment where I use the prompt of the LLM as a judge above are shown in the below screenshot.

LLM as a judge faithfulness score plotted against number of bullets
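The ablation itself is easy to reproduce. The sketch below generates the progressively shorter summaries; the post does not say in which order bullets were removed, so dropping them from the end is an assumption, and `ablate_bullets` is a hypothetical helper name.

```python
def ablate_bullets(bullets):
    """Yield (bullets_kept, summary_text), dropping the last bullet each step."""
    for n_kept in range(len(bullets), 0, -1):
        yield n_kept, "\n".join(f"- {b}" for b in bullets[:n_kept])


# Placeholder bullets; in the experiment these are the summary bullets above.
variants = list(ablate_bullets(["first point", "second point", "third point"]))
for n_kept, text in variants:
    print(n_kept, repr(text))
```

Each variant can then be scored by whichever metric is under test and plotted against the number of bullets kept.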

Now, let’s look at an example implementation of the proposed metric using DSPy. First we need to extract atomic facts, using the KeyFactExtraction module shown below.

```python
import dspy
from pydantic import BaseModel, Field


class KeyFactExtractorOutput(BaseModel):
    key_fact_list: list[str] = Field(description="A list of key facts mentioned in the text.")


class KeyFactExtractorInput(BaseModel):
    text: str = Field(description="The text to use for extracting key facts.")


class KeyFactExtraction(dspy.Signature):
    """
    Instructions:
    1. You are given a <keyfactor_input>. Your task is to break the <keyfactor_input> down into a list of atomic facts.
    2. An atomic fact is a sentence containing a singular piece of information.
    3. All atomic facts should be added to a list.
    4. You should only output the complete list as <key_fact_output>.
    5. Your task is to do this for <keyfactor_input>.
    6. After collecting the <key_fact_output> check if there are duplicates and keep only the one with
    the most information.
    """

    facts_input: KeyFactExtractorInput = dspy.InputField()
    facts_output: KeyFactExtractorOutput = dspy.OutputField()


# The typed predictor ensures we get a list of facts back.
factextractor = dspy.TypedPredictor(KeyFactExtraction)

# `blogpost` is the string representation of the blog post, ending with:
# "post above this paragraph as input to a summarization."
key_facts_blogpost = factextractor(facts_input=KeyFactExtractorInput(text=blogpost))
```

This gives us a list of facts that we can check against the summary. What is great about this is that we now have a set of facts that were retrieved by asking the LLM to extract facts as a single, dedicated task, which is a separate task from summarizing the input. So when our main goal is to retain as many facts as possible, this is the specific problem the LLM should solve in this step.

['LLM Evaluation requires knowing what you want to know.',

'Evaluating LLM outputs is more difficult than evaluating a classification problem.',

'Classification problems deal with discrete outputs in the form of a label.',

'Evaluation of an LLM output, which is a text string, is not well defined.',

'Classical metrics such as Bert score need a clearly defined ground truth dataset.',

'For a metric to be useful, it needs to be specific enough to inspire changes and indicate the direction of change.',

'LLMs as a judge come with issues such as bias in scoring.',

'An example of a prompt used with GPT-4 showed only a .6 correlation with human judgement.',

'LLMs produce non-deterministic output.',

'Specifying things in the prompt that are important to the business can trigger a hallucination.',

'Each summary has some specific expectation based on the input and the specification coming from the business.',

'The basic unit of information that makes a summary useful is a fact.',

'The approach named SAFE looks for the facts on the internet with an agentic workflow.',

'The prompt primarily asks the model to split the sentence into its component facts.',

'There seems to be a way to evaluate an LLM output based on expected facts.']

Now, let’s use these facts to evaluate the summary. This means we iterate through the list of facts, check whether each one is contained in the summary (FactChecker module below), and compute the ratio of facts that are present with respect to the entire set of facts. The one thing to be careful about in this step is that it might involve a lot of computation, so using a relatively cheap LLM is probably a good idea in the long run: an entailment check does not need GPT-4o, and we can certainly make do with cheaper models.

```python
import dspy
from pydantic import BaseModel, Field


class FactCheckerInput(BaseModel):
    text: str = Field(description="The text to evaluate for whether the fact was correctly included.")
    fact: str = Field(description="The fact to check for.")


class FactChecker(dspy.Signature):
    """
    Check if the fact is mentioned in the text.
    If the fact is mentioned then return True, else return False.
    """

    Text: FactCheckerInput = dspy.InputField()
    Output: bool = dspy.OutputField()
```
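The ratio computation around the fact checker can be sketched as follows. This is not the author's FactCheckerMetric, whose code is not shown in the post: `fact_check_score` and `naive_contains` are hypothetical names, the example facts are placeholders, and the substring check merely stands in for the LLM call so that the sketch runs without a model.

```python
from typing import Callable, List, Tuple


def fact_check_score(
    facts: List[str],
    summary: str,
    is_entailed: Callable[[str, str], bool],
) -> Tuple[float, List[Tuple[bool, str]]]:
    """Return the ratio of facts found in `summary` plus the per-fact verdicts."""
    if not facts:
        raise ValueError("need at least one fact to compute a ratio")
    results = [(bool(is_entailed(summary, fact)), fact) for fact in facts]
    score = sum(ok for ok, _ in results) / len(results)
    return score, results


def naive_contains(text: str, fact: str) -> bool:
    """Placeholder check: case-insensitive substring match, NOT entailment."""
    return fact.lower() in text.lower()


score, results = fact_check_score(
    facts=["LLMs produce non-deterministic output.", "A fact the summary dropped."],
    summary="- LLMs produce non-deterministic output.",
    is_entailed=naive_contains,
)
print(score, results)
```

Passing the checker in as a callable keeps the scoring logic independent of which model, cheap or expensive, performs the individual checks.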

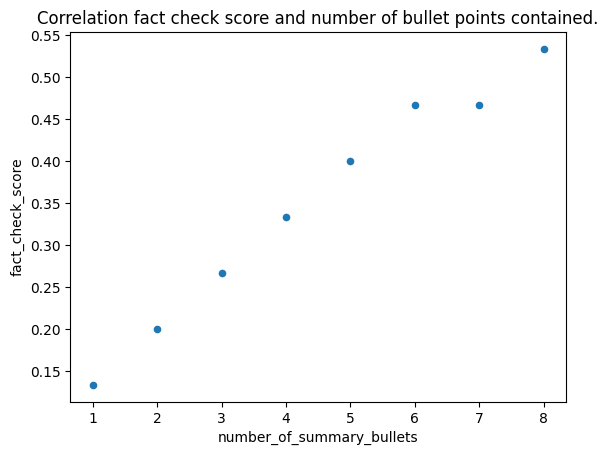

Comparison of LLM as a judge faithfulness score plotted against number of bullets vs. the Fact Check metric

One observation that holds true for any LLM as a judge is that the scores seem to saturate and that it is difficult to assign truly meaningful continuous or even discrete scores. This can clearly be seen in the comparison image, where we used the LLM judge on the same data as the fact check metric (strictly speaking, a decomposed LLM as a judge).

This is now the final step, where we run the experiment from above using the FactCheckerMetric. It is basically the ratio of entailed facts to the complete set of facts, i.e. if we identify 50% of the facts as entailed in the summary, the score is 0.5.

And voilà, we see:

- A distribution of scores that is much more sensible, i.e. we see a monotonic decline in line with the loss of information. While not perfect, it is definitely better than the LLM as a judge.

- We can rely on the judgement, as we are asking the LLM for a much more decomposed set of things.

- And probably most importantly, we gain some explainability, since we know which facts are missing, and we can use this to improve our model if we decide to do so.

[(False, 'LLM Evaluation requires knowing what you want to know.'),

(True, 'Evaluating LLM outputs is more difficult than evaluating a classification problem.'),

(False, 'Classification problems deal with discrete outputs in the form of a label.'),

(True, 'Evaluation of an LLM output, which is a text string, is not well defined.'),

(True, 'Classical metrics such as Bert score need a clearly defined ground truth dataset.'),

(True, 'For a metric to be useful, it needs to be specific enough to inspire changes and indicate the direction of change.'),

(True, 'LLMs as a judge come with issues such as bias in scoring.'),

(False, 'An example of a prompt used with GPT-4 showed only a .6 correlation with human judgement.'),

(True, 'LLMs produce non-deterministic output.'),

(False, 'Specifying things in the prompt that are important to the business can trigger a hallucination.'),

(False, 'Each summary has some specific expectation based on the input and the specification coming from the business.'),

(False, 'The basic unit of information that makes a summary useful is a fact.'),

(False, 'The approach named SAFE looks for the facts on the internet with an agentic workflow.'),

(False, 'The prompt primarily asks the model to split the sentence into its component facts.'),

(True, 'There seems to be a way to evaluate an LLM output based on expected facts.')]
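Because the result is a list of `(verdict, fact)` pairs, pulling out what was lost takes one line. The helper name `missing_facts` is hypothetical; the two sample entries are copied from the list above.

```python
def missing_facts(results):
    """Return the facts whose verdict is False, in their original order."""
    return [fact for ok, fact in results if not ok]


# Two entries copied from the result list above.
sample_results = [
    (True, "LLMs produce non-deterministic output."),
    (False, "The basic unit of information that makes a summary useful is a fact."),
]
for fact in missing_facts(sample_results):
    print("missing:", fact)
```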

For example, here we see that facts regarding the prompt were not retained in the summary. We might hence add a part to the summarization prompt that specifically asks to retain modelling-related information and frames the summarizer as a data scientist with NLP knowledge.

```python
import dspy


class Summarizer(dspy.Signature):
    """
    You are a data scientist with knowledge in NLP.
    You will be given a <source_text> which you have to summarize
    keeping all important details as well as modeling related information intact.
    We want to still have all facts of the original <source_text> and show them as bullet points.
    """

    source_text = dspy.InputField()
    summary = dspy.OutputField()
```

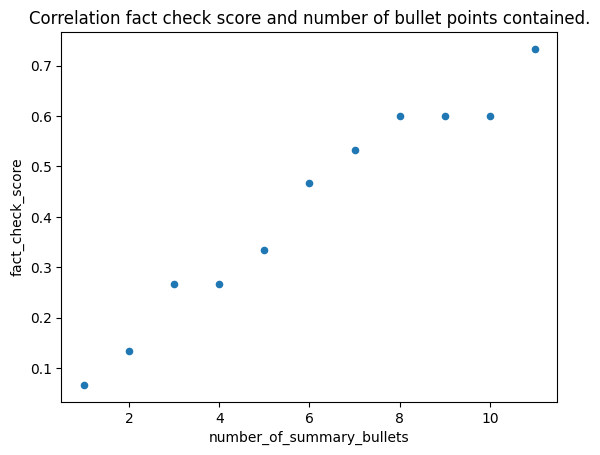

This prompt change improves the fact check score from 0.53 to 0.73, i.e. with a simple, general change to the prompt we retain 20 percentage points more of the facts than before. Applying this to a larger dataset would enable us to see whether we, on average, extract more or less of the information contained in the source text. We also see that the design of the metric makes it easier to look into patterns of failure modes and thus to improve the prompt with respect to them.

While I admit this is more costly, evaluating LLMs is not something we can just skip. In my opinion, however, it is much more costly to depend on an LLM judge that wreaks havoc in production because it does not correspond to realistic notions of quality.
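When reporting such an improvement it helps to separate the absolute change in the score from the relative one, and to average over a dataset rather than a single document. A minimal sketch with hypothetical helper names follows; only the 0.53 and 0.73 come from the post.

```python
from statistics import mean


def score_change(before, after):
    """Return (absolute, relative) change between two metric values."""
    absolute = after - before
    relative = absolute / before
    return absolute, relative


def mean_change(scores_before, scores_after):
    """Difference in average score across a dataset of documents."""
    return mean(scores_after) - mean(scores_before)


absolute, relative = score_change(0.53, 0.73)
print(f"{absolute:+.2f} absolute, {relative:+.0%} relative")
```

For the numbers above this prints `+0.20 absolute, +38% relative`: the score rises by 0.20, which is roughly 38% more facts retained relative to the first prompt.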