Introduction

Let’s face it: Large Language Models are complex. As in all AI-related applications, model training is just part of the journey. Once you build or select a cutting-edge model to provide a unique user experience to customers, there are other things to worry about: prompt engineering, chain-of-thought design, retrieval-augmented generation, LLM agents, production deployment, and so on. If we expand the focus to non-functional requirements, the list goes on: security, hallucination, guardrails, prompt injection, and more.

Given the complexity involved, many companies and researchers have invested in developing no-code LLM solutions. In this post, I will provide an overview of some of these tools.

No Code: Motivation

Large Language Model applications are still fairly new. As such, few organisations are ready to dig deep into the trenches of fine-tuning, LangChain, prompt engineering and so on.

No-code platforms try to fill this gap by turning some of these processes into simple graphical workflows. Think of a flowchart tool, but instead of drawing, you seamlessly define the different LLM chains that make up a conversational workflow.

This is what these tools aim to achieve: broad access to rich conversational experiences, as well as to validation, compliance and security tasks.

Flowise

Simply put, Flowise is a drag-and-drop UI for building your customized LLM flow.

It is fully open source, which means you can host it yourself on premises, at your cloud provider, or through managed services such as Restack, Railway or Render.

Components

Flowise has 3 different modules in a single mono repository.

Examples

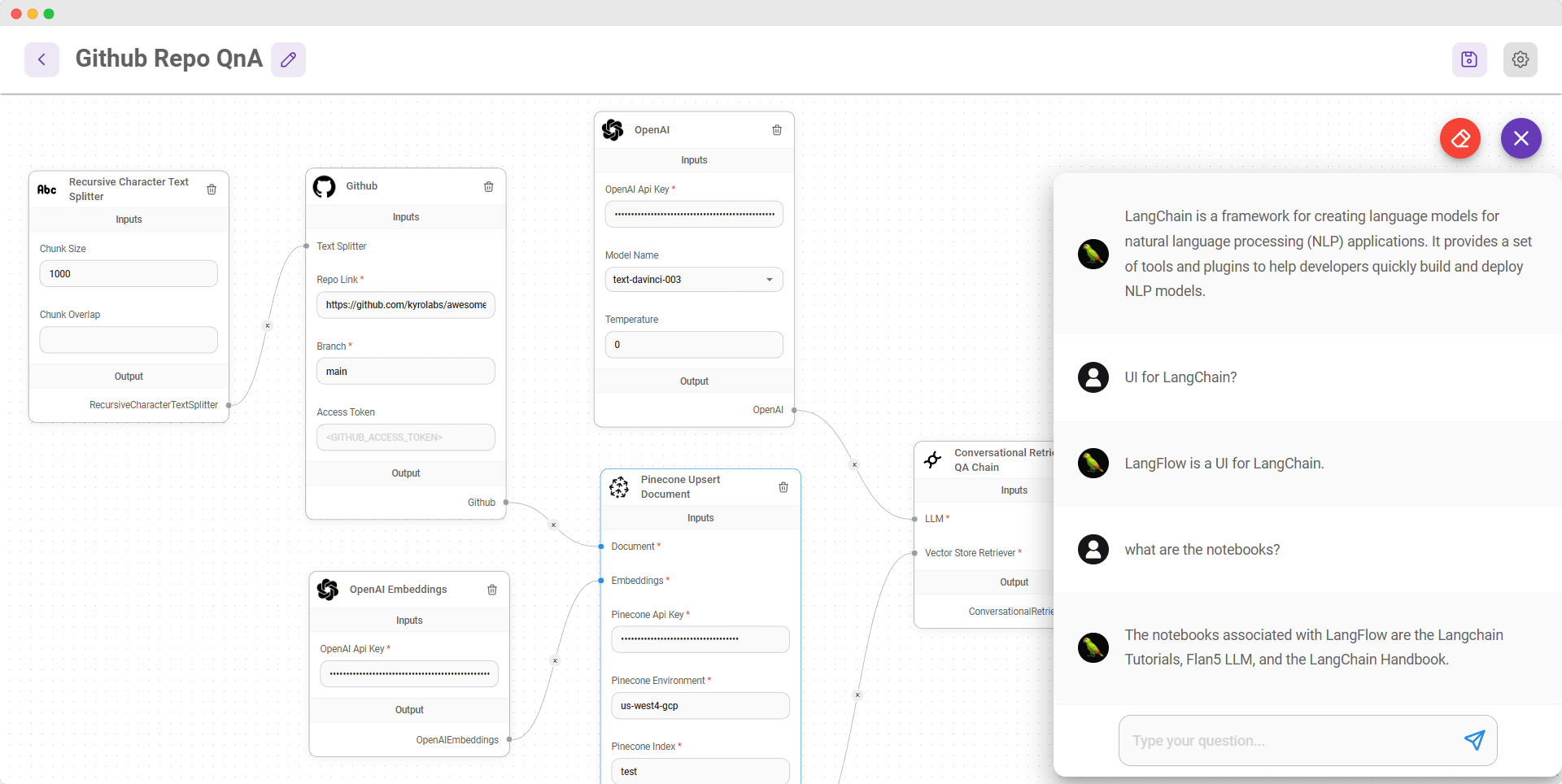

Q&A Retrieval Chain

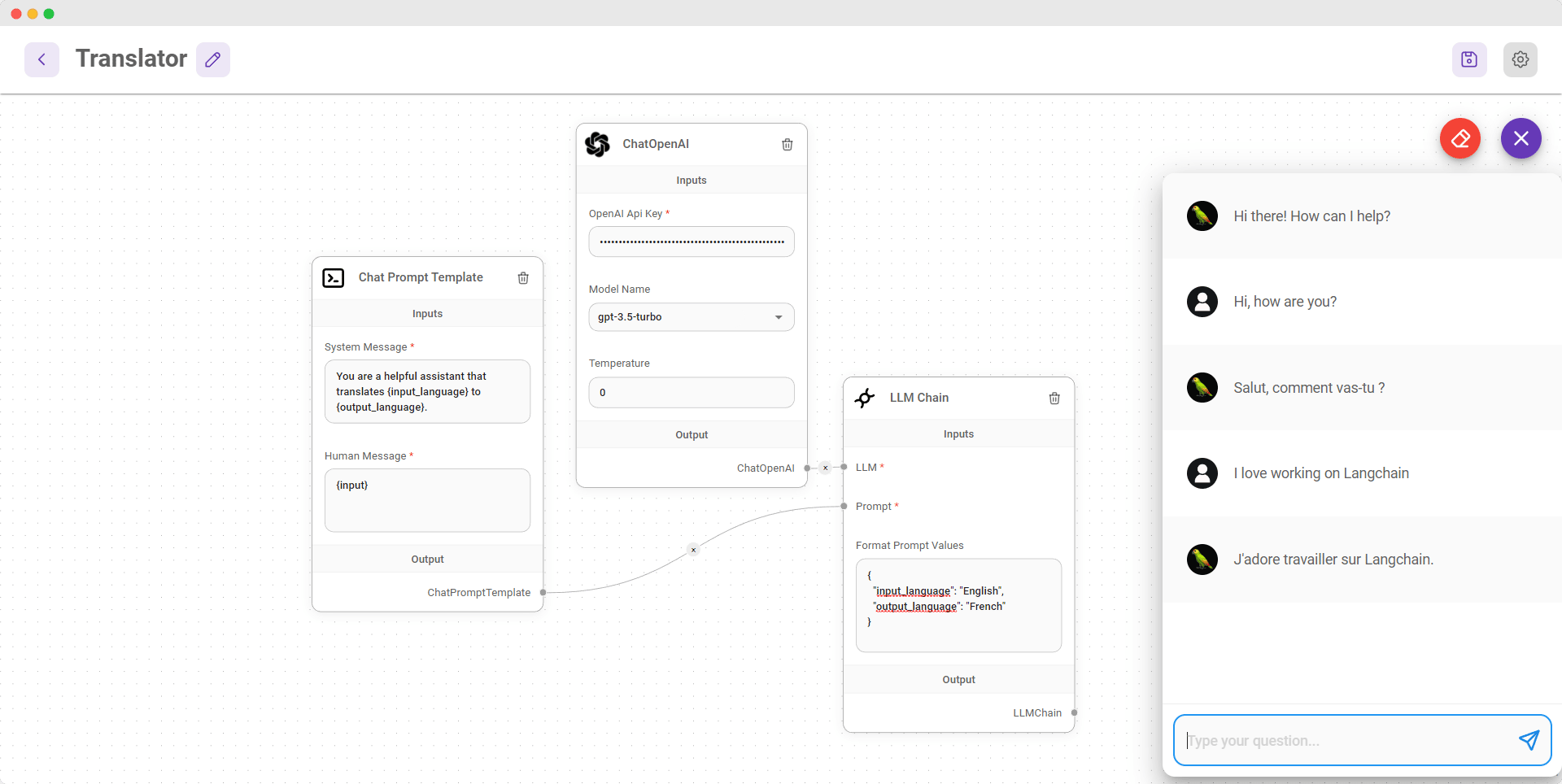

Language Translation Chain

Conversational Agent With Memory

Getting started

Flowise is based on Node.js (minimum 18.15.0). Running it locally or on a virtual machine is pretty simple:

- Installation

npm install -g flowise

- Startup

npx flowise start

- With authentication

npx flowise start --FLOWISE_USERNAME=user --FLOWISE_PASSWORD=1234

Once you start it up, Flowise will be available at localhost:3000 by default.
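Once a chatflow is saved in the UI, you can also call it over HTTP instead of using the built-in chat window. Here is a minimal sketch, assuming Flowise's prediction endpoint (`/api/v1/prediction/<chatflow-id>`) with a JSON body carrying a `question` field. The helper names `build_prediction_request` and `ask_flowise` are my own, and the chatflow ID is a placeholder, so check the API docs for your Flowise version before relying on it.

```python
import json
import urllib.request


def build_prediction_request(base_url: str, chatflow_id: str, question: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for a Flowise chatflow.

    Assumption: the prediction endpoint lives under /api/v1/prediction/<id>.
    """
    url = f"{base_url.rstrip('/')}/api/v1/prediction/{chatflow_id}"
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_flowise(base_url: str, chatflow_id: str, question: str, timeout: float = 30.0):
    """Send the question to a running Flowise instance and return the parsed JSON reply."""
    request = build_prediction_request(base_url, chatflow_id, question)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With Flowise running locally, `ask_flowise("http://localhost:3000", "your-chatflow-id", "Hey, how are you?")` should return the chatflow's reply. If you enabled authentication or an API key, the request needs the matching header, which this sketch leaves out.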

Community

Flowise has close to 17k stars on GitHub as of October 2023. The first version (1.2.13) was released back in June 2023, and since then there have been 12 other releases (the latest version is 1.3.7). There are 48 contributors to date, which indicates that the community is pretty active for such a niche scope.

Learn more

Langflow

Langflow is a UI for LangChain, designed with react-flow to provide an effortless way to experiment and prototype flows.

Installation

Locally

You can install Langflow from pip:

# This installs the package without dependencies for local models

pip install langflow

To use local models (e.g. llama-cpp-python), run:

pip install "langflow[local]"

This will install the following dependencies:

- CTransformers

- llama-cpp-python

You can still use models from projects like LocalAI.

Next, run:

python -m langflow

or

langflow run # or langflow --help

Getting Started

Creating flows with Langflow is easy. Simply drag sidebar components onto the canvas and connect them together to create your pipeline. Langflow provides a range of LangChain components to choose from, including LLMs, prompt serializers, agents, and chains.

Explore by editing prompt parameters, linking chains and agents, tracking an agent’s thought process, and exporting your flow.

Once you’re done, you can export your flow as a JSON file to use with LangChain. To do so, click the “Export” button in the top right corner of the canvas. Then, in Python, you can load the flow with:

from langflow import load_flow_from_json

flow = load_flow_from_json("path/to/flow.json")

# Now you can use it like any chain

flow("Hey, have you heard of Langflow?")
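Before loading an exported flow, it can be handy to check which components it contains. The sketch below assumes the export follows the react-flow layout Langflow is built on, with nodes under `data.nodes` and connections under `data.edges`, each edge carrying `source` and `target` node IDs. Those key names are an assumption on my side and may differ between versions, so missing keys are simply treated as empty. `summarize_flow` is a hypothetical helper, not part of Langflow.

```python
import json
from pathlib import Path


def summarize_flow(flow: dict) -> dict:
    """Return node IDs and (source, target) pairs from an exported flow dict.

    Assumption: nodes and edges sit under the "data" key, react-flow style.
    """
    graph = flow.get("data", {})
    node_ids = [node.get("id") for node in graph.get("nodes", [])]
    connections = [
        (edge.get("source"), edge.get("target")) for edge in graph.get("edges", [])
    ]
    return {"nodes": node_ids, "connections": connections}


def summarize_flow_file(path: str) -> dict:
    """Read an exported flow JSON file from disk and summarize it."""
    return summarize_flow(json.loads(Path(path).read_text(encoding="utf-8")))
```

Calling `summarize_flow_file("path/to/flow.json")` gives a quick overview of the pipeline without instantiating any models.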

Learn More

AgentGPT

AgentGPT allows you to configure and deploy Autonomous AI agents. Name your own custom AI and have it embark on any goal imaginable. It will attempt to reach the goal by thinking of tasks to do, executing them, and learning from the results 🚀.
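To make the "think of tasks, execute them, learn from the results" idea more concrete, here is a toy version of such a loop. It is a sketch of the general pattern, not AgentGPT's actual implementation: `run_agent` and the `demo_*` functions are hypothetical names, and the stubs stand in for what would be LLM calls in a real agent.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple


def run_agent(
    goal: str,
    plan: Callable[[str], List[str]],
    execute: Callable[[str], str],
    reflect: Callable[[str, str, str], List[str]],
    max_steps: int = 10,
) -> List[Tuple[str, str]]:
    """Run a plan/execute/reflect loop and return (task, result) pairs."""
    tasks: Deque[str] = deque(plan(goal))  # initial task list derived from the goal
    history: List[Tuple[str, str]] = []
    while tasks and len(history) < max_steps:  # max_steps guards against endless loops
        task = tasks.popleft()
        result = execute(task)
        history.append((task, result))
        # "Learning from the results": reflection may queue follow-up tasks.
        tasks.extend(reflect(goal, task, result))
    return history


# Stub callables standing in for LLM calls, so the sketch runs offline.
def demo_plan(goal: str) -> List[str]:
    return [f"research: {goal}", f"summarize: {goal}"]


def demo_execute(task: str) -> str:
    return f"done({task})"


def demo_reflect(goal: str, task: str, result: str) -> List[str]:
    # Queue one follow-up after the research step, nothing otherwise.
    return [f"verify: {goal}"] if task.startswith("research") else []
```

Running `run_agent("plan a trip", demo_plan, demo_execute, demo_reflect)` works through the two planned tasks plus the follow-up queued by the reflection step. The `max_steps` cap matters in practice, since an agent that keeps generating new tasks would otherwise never stop.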

Getting Started

- Open your code editor

- Open the Terminal - Typically, you can do this from a ‘Terminal’ tab or by using a shortcut (e.g., Ctrl + ~ for Windows or Control + ~ for Mac in VS Code).

- Clone the Repository and Navigate into the Directory - Once your terminal is open, you can clone the repository and move into the directory by running the commands below.

For Mac/Linux users 🍎 🐧

git clone https://github.com/reworkd/AgentGPT.git

cd AgentGPT

./setup.sh

For Windows users 🪟

git clone https://github.com/reworkd/AgentGPT.git

cd AgentGPT

./setup.bat

- Follow the setup instructions from the script - add the appropriate API keys, and once all of the services are running, open http://localhost:3000 in your web browser.

Learn More

Final Remarks

I found these three tools super cool, not only for designing LLM workflows, but also for learning more about concepts such as prompt engineering, retrieval-augmented generation and autonomous agents. Each tool has its own focus and perks, with both fully open source options (more flexibility and freedom for DIY deployments) and paid options (no hassle in terms of deploying or managing infrastructure).

This is just an initial overview of the current LLM No-Code Tooling space. Stay tuned for updates, and be sure to share your insights in the comments :)